Title Story: How your devices listen, what they capture, and the simple steps that keep your conversations out of the algorithm’s ears.

Cybersecurity Tip of the Week: A 60-second microphone lockdown that shuts down silent tracking on your phone, browser, and smart speakers.

Cybersecurity Breach of the Week: North Korea’s phantom IT workforce infiltrates U.S. companies using stolen identities, AI, and remote-work blind spots.

Appearance of the Week: I joined host David Puner on the CyberArk Podcast to take a deep dive into the mindset and tactics needed to defend against today’s sophisticated cyber threats.

AI Trend of the Week: I have to admit I’m enjoying the newest AI trend, which remixes iconic songs into 1950s Motown soul AI covers. I share my favorite below.

Title Story

Is Your Phone Listening to You?

I spent years hunting spies—people who lived in the shadows and listened for a living. They were deliberate, patient, and skilled. Today, we’ve built devices that do the same thing, only faster, cheaper, and with better battery life.

We’ve all had the same experience: say the word “vacation” near your phone and suddenly Instagram thinks you’re dying to snorkel with turtles in Maui. Mention a weird product near your Alexa—dog wheelchairs, artisanal lawn gnomes—and, like magic, the ads appear on your Amazon feed. It feels like espionage. And in a way, it is.

Let’s start with the truth: your devices are listening. Not in the Hollywood sense where a bored tech analyst monitors your breakfast conversations. Instead, your phone, Alexa, Google Home, and even apps you barely remember downloading constantly scan for what they call wake words. “Alexa.” “Hey Google.” “Siri.” These systems monitor audio streams in real time, using AI to detect when they should pay attention. That means the microphone is open. Always.
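For the technically curious, here is a toy model of that always-open microphone. It is a minimal sketch under loud assumptions, not how any vendor's device actually works: text strings stand in for audio frames, a substring match stands in for the AI wake-word detector, and the names `run_device`, `LOCAL_BUFFER_FRAMES`, and `CAPTURE_FRAMES` are invented for illustration.

```python
from collections import deque

WAKE_WORD = "alexa"       # text stand-in; a real detector scores raw audio
LOCAL_BUFFER_FRAMES = 2   # hypothetical size of the on-device rolling buffer
CAPTURE_FRAMES = 3        # hypothetical number of frames recorded after a trigger


def run_device(frames):
    """Simulate an always-on mic: return (uploads, frames still held locally)."""
    local = deque(maxlen=LOCAL_BUFFER_FRAMES)  # oldest frames fall off automatically
    uploads = []
    current = None   # utterance being recorded, or None while idle
    remaining = 0
    for frame in frames:
        if current is not None:
            # A trigger already fired: keep recording until the window closes.
            current.append(frame)
            remaining -= 1
            if remaining == 0:
                uploads.append(current)
                current = None
            continue
        # Idle: every frame is still heard and checked, then overwritten.
        local.append(frame)
        if WAKE_WORD in frame.lower():
            current = [frame]
            remaining = CAPTURE_FRAMES
    return uploads, list(local)


frames = ["book the trip", "Alexa", "weather", "in Maui", "today",
          "private chat", "more private chat"]
uploads, held = run_device(frames)
print(uploads)  # the utterance that would leave the device
print(held)     # what is still sitting in the local buffer
```

Every frame passes through the detector, even the ones that are thrown away. Feed the same function a sentence about a "trip to Alexandria" and the crude matcher fires on the wrong word, which is a loose analogy for an accidental activation.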

Companies have spent real money perfecting this.

Amazon received patent US8798995B1 for a “voice-sniffing algorithm” that detects keywords in ambient conversation, and US10096319B1, which uses voice input to infer physical and emotional traits (e.g., a sore throat, sickness, or an excited vs. sad mood). Google patented US8138930B1, a system that analyzes background audio to target ads, and filed US 2022/0246140 A1, a published application for keeping a device in a continuous listening state to detect both fixed and context-specific wake words. Facebook holds a patent (US 10,075,767) for technology that lets your phone record ambient audio during TV shows and ads, match it to hidden ultrasonic signals, and determine what you’re watching for ad targeting.

And then came the whistleblowers.

Bloomberg, The Guardian, Business Insider, and others reported that Amazon, Apple, and Google all used human contractors to review snippets of audio captured from their devices. Apple eventually suspended the program after public backlash. The recordings were supposed to activate only after wake words. They didn’t. Contractors allegedly heard fights, intimate moments, private medical issues. In the intelligence world, this is what we call “collateral collection”—the stuff you weren’t aiming for but scooped up anyway.

Then there’s a lesser-known trick: sonic beacons. These are high-frequency audio signals, inaudible to humans, that your phone can hear. Some apps use them to track whether your device has been near a specific TV commercial, store speaker system, or even another app. You’ll never know they were triggered. But your ad feed will.
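To make the beacon idea concrete, here is a rough sketch of how software could check a chunk of audio for energy at one near-ultrasonic frequency, using the standard Goertzel algorithm. Everything specific is an assumption for illustration: the 19 kHz beacon frequency, the 48 kHz sample rate, the detection threshold, and the helper names `goertzel_power` and `make_tone`. Real tracking SDKs are not public, and this does not claim to reproduce any of them.

```python
import math


def goertzel_power(samples, sample_rate, target_hz):
    """Return the squared magnitude of the DFT bin nearest target_hz."""
    n = len(samples)
    k = round(n * target_hz / sample_rate)   # nearest frequency bin
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def make_tone(freq_hz, sample_rate, n, amplitude=1.0):
    """Generate n samples of a sine wave (synthetic test audio)."""
    return [amplitude * math.sin(2.0 * math.pi * freq_hz * i / sample_rate)
            for i in range(n)]


SAMPLE_RATE = 48_000   # assumed microphone sample rate
N = 4_800              # 0.1 seconds of audio
BEACON_HZ = 19_000     # hypothetical beacon frequency, above most adults' hearing

# An audible 440 Hz tone stands in for ordinary room sound.
room = make_tone(440, SAMPLE_RATE, N)
# The same sound with a faint 19 kHz tone mixed in.
room_with_beacon = [a + b for a, b in
                    zip(room, make_tone(BEACON_HZ, SAMPLE_RATE, N, amplitude=0.05))]

quiet = goertzel_power(room, SAMPLE_RATE, BEACON_HZ)
loud = goertzel_power(room_with_beacon, SAMPLE_RATE, BEACON_HZ)

# Arbitrary illustrative threshold: beacon band is far stronger than baseline.
beacon_present = loud > 100 * max(quiet, 1e-9)
print(beacon_present)
```

The point of the sketch is how little it takes: a tone at one-twentieth the volume of the audible sound is trivial for software to pick out, even though you would never hear it.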

And yes, apps absolutely use AI to scan for spoken keywords. You talk about “flights,” “deals,” “kayaks,” “back pain,” or “new boots,” and within hours, your digital world nudges you toward a purchase. Surveillance, not magic.

In 2018, a Northeastern University study tried to confirm whether phones secretly recorded audio without permission. They found no hard evidence of true “covert eavesdropping,” but they did find thousands of instances of apps sending screenshots, screen recordings, location data, and usage behavior without the user knowing.

Run your own countermeasures.

The 48-Hour Test: Pick a bizarre product you’ve never searched for. Say it aloud near your phone 10–15 times over two days. Don’t type it. Don’t search it. Just talk about it. Then see what pops up in your ads. Plenty of people have done this and watched the results roll in like clockwork.

So is your phone listening? Let’s put it this way: if an FBI operative behaved the way your apps do, we’d call it surveillance. And you gave them permission.

Cybersecurity Tip of the Week

THINK LIKE A SPY: Your 60-Second Microphone Lockdown

During my years running Ghost surveillance operations for the FBI, I learned that if there’s a microphone in the room, assume someone can turn it against you. Here’s how you protect yourself.

iPhone

Settings → Privacy & Security → Microphone

Kill access for Facebook, Instagram, TikTok, shopping apps, and any app that doesn’t need your voice.

App Privacy Report

Turn it on. Check who’s touching your mic.

Siri

If you don’t use hands-free, disable “Listen for ‘Hey Siri.’”

Android

Settings → Privacy → Permission Manager → Microphone

Revoke mic rights from anything nonessential.

Use the global mic kill switch in Quick Settings (available on Android 12 and later) when you want real silence.

Browsers

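Chrome: Settings → Privacy and security → Site settings → Microphone

Firefox: Settings → Privacy & Security → Permissions → Microphone

Edge: Settings → site permissions → Microphone (the exact menu name varies by version)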

Default to Block. Clear any “Allowed” sites.

Smart Speakers

Use the physical mute button when the discussion isn’t for public consumption.

Review and delete voice history in the Alexa or Google Home app.

Locking down your microphones makes you harder to profile, harder to track, and a far less predictable data point. In my old line of work, that’s how you stay alive.

In your digital life, it’s how you stay private.

Don’t miss a newsletter! Subscribe to Spies, Lies & Cybercrime.

Cybersecurity Breach of the Week

North Korea’s Remote-Work Infiltration

North Korea has refined a new form of espionage: remote imposters. This week, the DOJ announced guilty pleas from five people who helped North Korean operatives infiltrate 136 U.S. companies by posing as ordinary IT workers. The facilitators supplied clean identities—some stolen, some real—and even hosted company-issued laptops in their homes so it appeared the workers lived inside the United States.

Once inside, the imposters performed just enough work to stay credible. In many cases, they used AI tools to complete tasks, allowing them to remain embedded longer while they quietly siphoned data, stole secrets, and collected U.S. paychecks. The scheme generated $2.2 million for the regime.

One participant was an active-duty Army service member. Another ran a fake staffing company specializing in “certified” remote IT talent. A Ukrainian accomplice stole and sold U.S. identities to North Korean operatives, who used them to secure jobs at more than 40 firms.

In a world built on remote work, the weakest link might be your hiring pipeline. The fix isn’t complicated: strong background checks, real identity verification, and a second look at anyone who seems “too perfect” on paper.

A little diligence can stop a spy in sheep’s clothing.

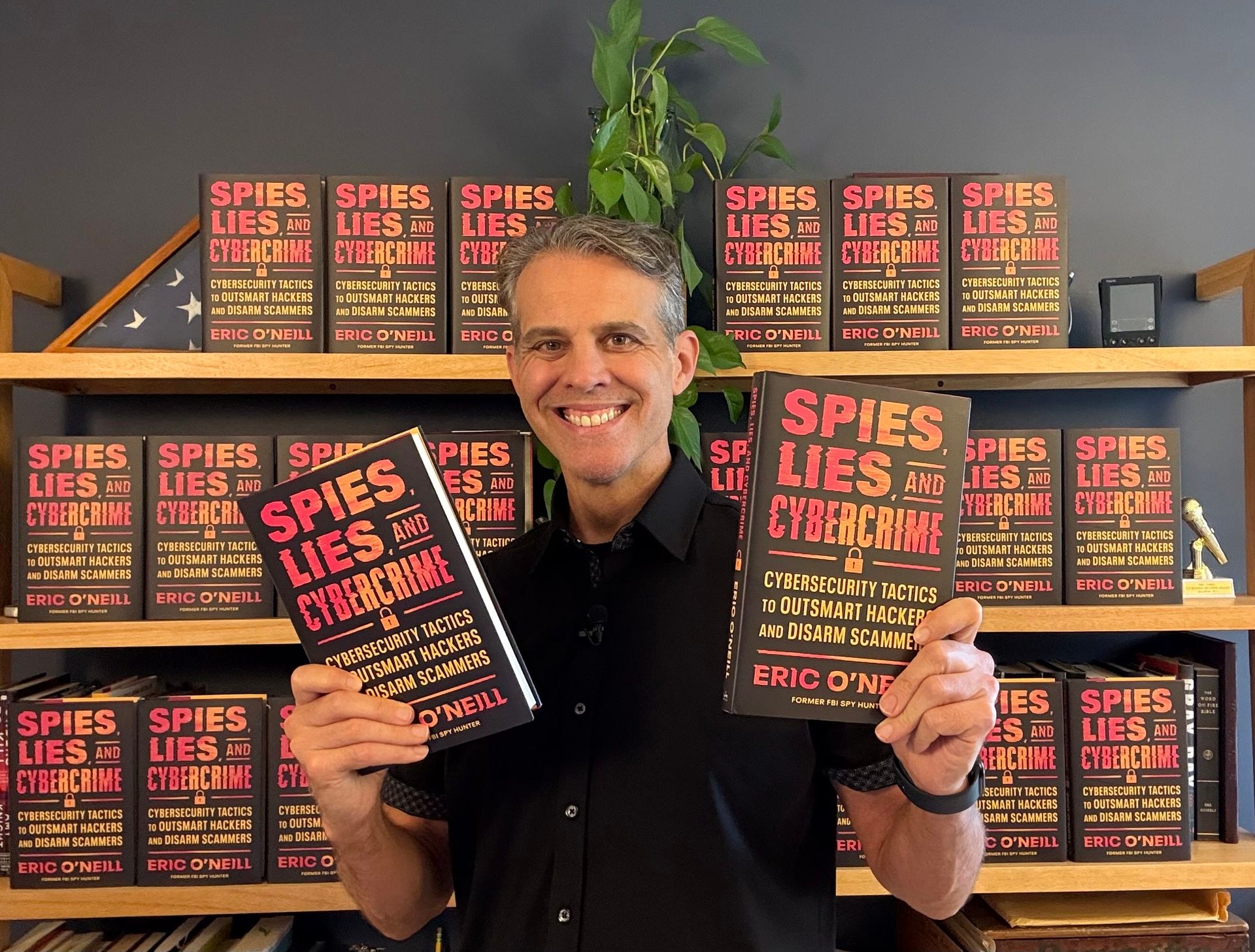

Get the Book: Spies, Lies, and Cybercrime

Thanks to all of you, my book, Spies, Lies, and Cybercrime, is an instant national bestseller and an Amazon #1 Bestseller.

If you haven’t already, please buy SPIES, LIES, AND CYBERCRIME. If you already have, thank you, and please consider gifting some to friends and colleagues. It makes a perfect holiday gift for anyone you want to protect from cyber scams.

Please share! Forward this email to your friends and family. Here's an easy-to-post cover graphic, a LinkedIn post, an IG reel, and a post.

📚 Get the book: https://ericoneill.net/books/spies_and_lies/

📺 Review the Book: https://www.amazon.com/Spies-Lies-Cybercrime-Cybersecurity-Outsmart/dp/0063398176/

📖 Get a Signed copy: https://www.kensingtonrowbookshop.com

🎤 Book me to speak at your next event: https://www.bigspeak.com/speakers/eric-oneill

Appearance of the Week

I joined host David Puner on the CyberArk Podcast to take a deep dive into the mindset and tactics needed to defend against today’s sophisticated cyber threats. We discussed how thinking like an attacker can help organizations and individuals stay ahead. The episode covers actionable frameworks, real-world stories, and practical advice for building cyber resilience in an age of AI-driven scams and industrialized ransomware.

AI Trend of the Week

I have to admit I’m enjoying the newest AI trend, which remixes iconic songs into 1950s Motown soul AI covers. This remix of Eminem’s Without Me has got to be my favorite. And while you’re at it, join me over on Instagram.

Please support our sponsors by clicking below and giving them a look.

Realtime User Onboarding, Zero Engineering

Quarterzip delivers realtime, AI-led onboarding for every user with zero engineering effort.

✨ Dynamic Voice guides users in the moment

✨ Picture-in-Picture stays visible across your site and others

✨ Guardrails keep things accurate with smooth handoffs if needed

No code. No engineering. Just onboarding that adapts as you grow.

Like What You're Reading?

Don’t miss a newsletter! Subscribe to Spies, Lies & Cybercrime for our top espionage, cybercrime and security stories delivered right to your inbox. Always weekly, never intrusive, totally secure.
